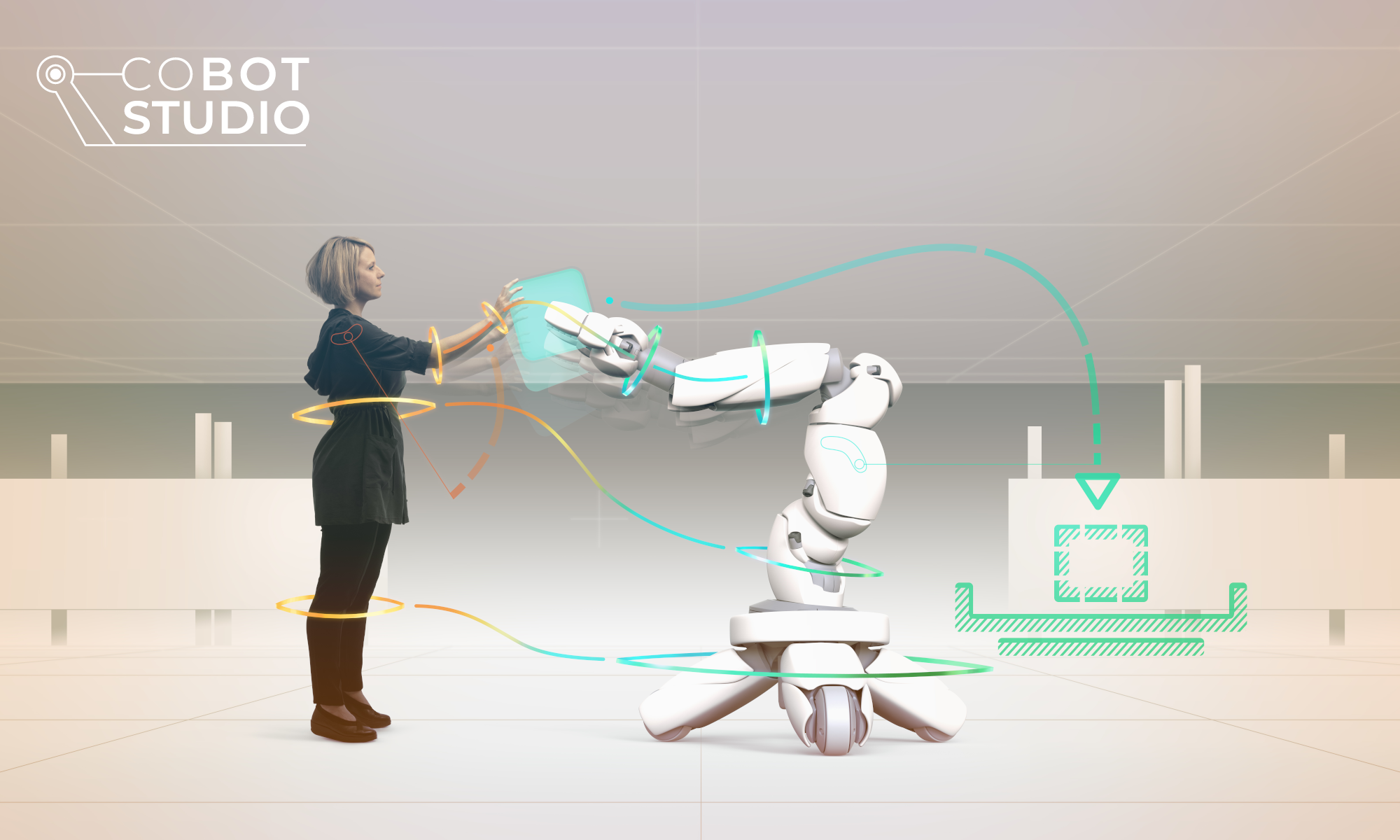

In the coming years, more and more workplaces will be equipped with collaborative robots (CoBots) that hold workpieces for employees, assemble car seats together with them, inspect packages or work physically close to people in other ways.

Understanding the states and next steps of such a robot is important not only for completing joint tasks successfully, but also for the robot's acceptance by its human interaction partners. After all, safety and trust are basic human needs built on mutual understanding. For human-robot collaboration, this means that just as the robot must be able to identify the states and intentions of its human partner, the human must in turn be able to understand and predict the states and planned actions of the robot.

Ideally, people can understand intuitively when a CoBot will actively intervene in the joint work process and in which direction it will move next. However, there is still a great need for research to specify which robotic communication signals are understandable and pleasant for which group of people in which work context.

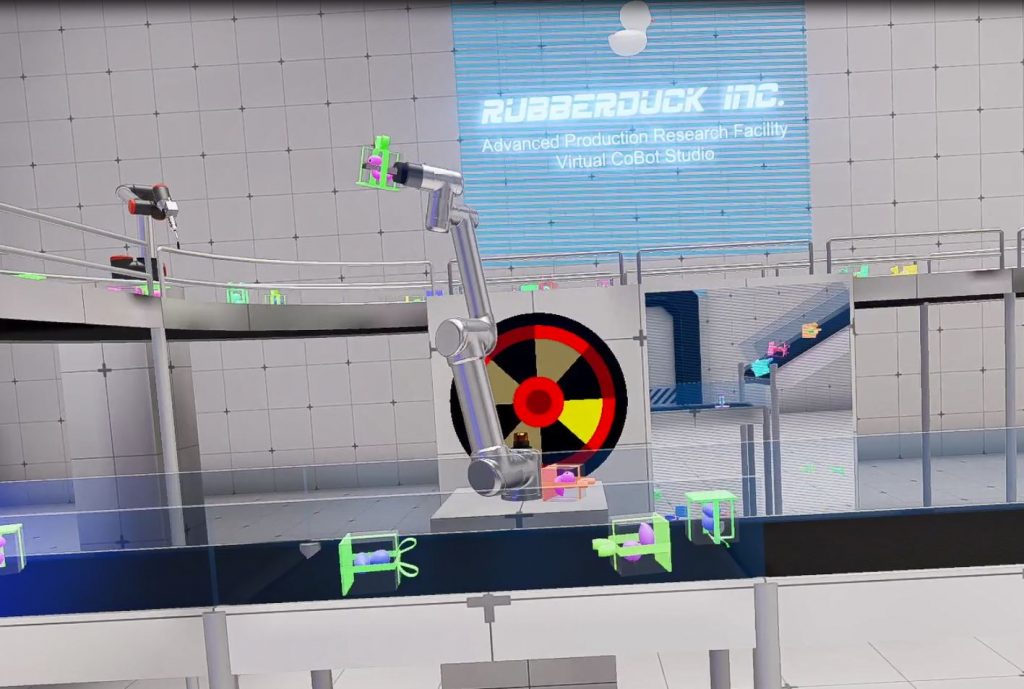

This is where the interdisciplinary consortium of the CoBot Studio comes in with new, creative methods. The project focuses on developing an immersive extended reality simulation in which communicative collaboration processes with mobile robots can be tried out playfully and studied under controllable conditions.